Uses base8-lion (one full epoch on 1.48M images) for the merge.

An SDXL anime base model that aims to create unique artworks.

It is not designed to reproduce copyrighted characters or artist styles.

This model can be seen as a derivative of the Animagine XL 3.0 project.

Basically, I'm collaborating with Linaqruf to make a better anime base model (though we clearly have different goals).

We share our models and techniques to improve the quality of each other's models.

That is also how this model was created.

Kohaku-XL base7 resumes training from beta7 and uses the same dataset as the beta series. This time, however, I used my own metadata system to create the captions (it can be seen as an advanced version of what Linaqruf used; I will open-source it soon).

The metadata database can be downloaded here:

KBlueLeaf/danbooru2023-sqlite · Datasets at Hugging Face

Training details:

LR: 8e-6/2e-6

Scheduler: constant with warmup

Batch size: 128 (batch size 4 * grad acc 16 * gpu count 2)
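As a rough illustration of these settings, the sketch below computes the effective batch size and a "constant with warmup" learning rate. It assumes a linear warmup, and since the warmup length is not stated, `warmup_steps` is a hypothetical parameter. Reading "8e-6/2e-6" as two separate learning rates (for example one for the UNet and one for the text encoders) is also an assumption.

```python
def effective_batch_size(per_gpu_batch: int, grad_accum: int, num_gpus: int) -> int:
    """Number of samples that contribute to one optimizer step."""
    return per_gpu_batch * grad_accum * num_gpus


def constant_with_warmup(step: int, base_lr: float, warmup_steps: int) -> float:
    """Ramp linearly from 0 to base_lr over warmup_steps, then hold constant.

    warmup_steps is hypothetical: the actual warmup length is not documented.
    """
    if warmup_steps > 0 and step < warmup_steps:
        return base_lr * step / warmup_steps
    return base_lr


# Assumption: "8e-6/2e-6" means (UNet LR, text encoder LR).
UNET_LR, TE_LR = 8e-6, 2e-6

print(effective_batch_size(4, 16, 2))  # 128
for step in (0, 50, 100, 10_000):
    print(step, constant_with_warmup(step, UNET_LR, warmup_steps=100))
```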

Kohaku-XL base8 is the same as base7 but uses a 5e-6/1e-6 LR and is trained for one full epoch on 1.48M images.
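For a sense of scale, one full epoch at the effective batch size of 128 is roughly 11.6k optimizer steps. This minimal arithmetic sketch treats "1.48M" as exactly 1,480,000 images (an approximation), counts a trailing partial batch as one step, and ignores the extra partial batches that aspect ratio bucketing can produce per bucket.

```python
import math


def steps_per_epoch(num_images: int, effective_batch: int) -> int:
    # Assumption: a trailing partial batch still produces one optimizer step.
    return math.ceil(num_images / effective_batch)


print(steps_per_epoch(1_480_000, 128))  # 11563
```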

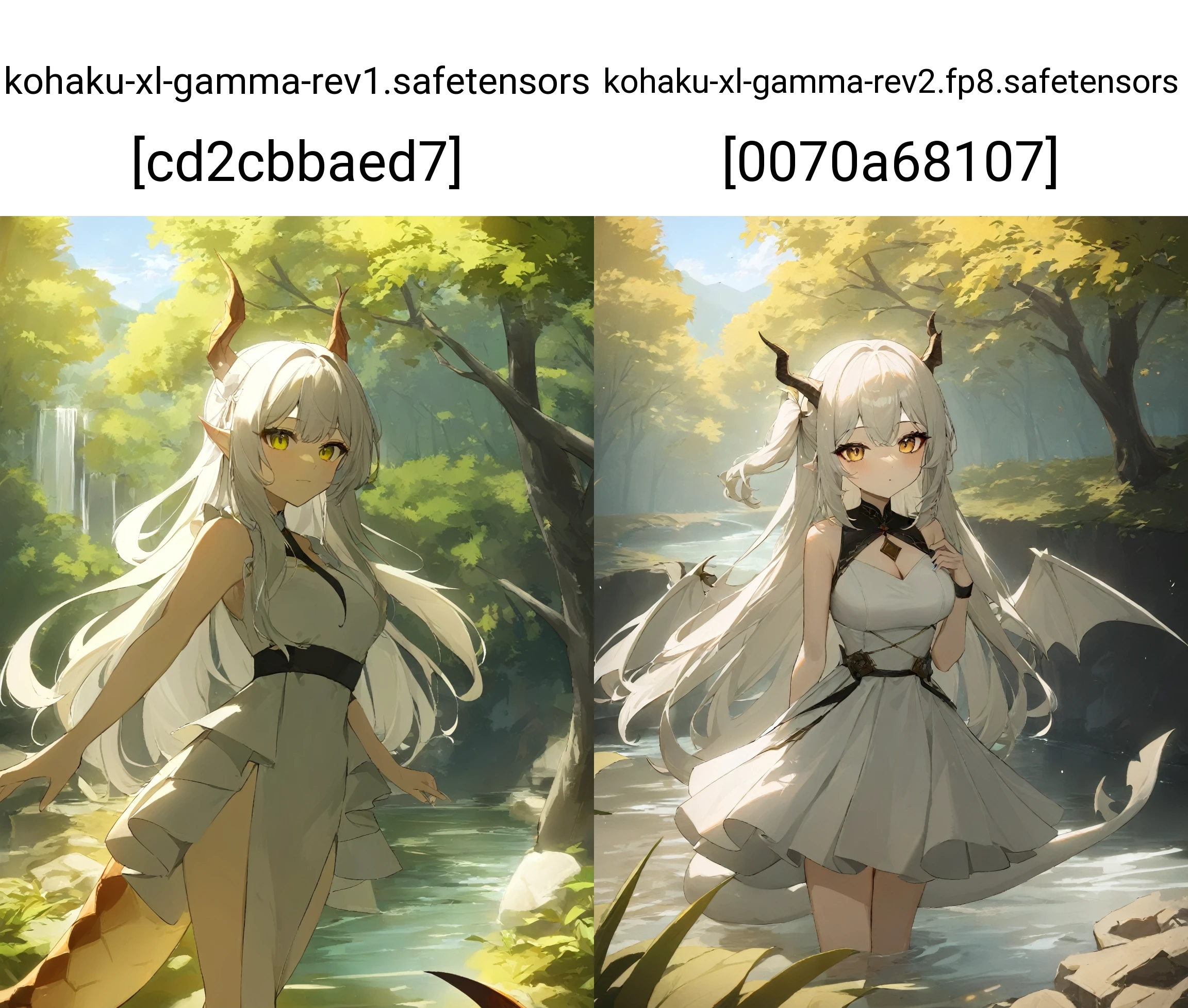

Kohaku-XL Gamma rev1 is a merged model that combines the learned diffs from anxl3 and Kohaku-XL base7, using this formula:

$$\text{gamma rev1} = \text{beta7} + 0.8\,(\text{anxl3} - \text{anxl2}) + 0.5\,(\text{base7} - \text{beta7})$$

Kohaku-XL gamma rev2 is merged with this equation:

$$\text{gamma rev2} = \text{beta7} + 1.0\,(\text{anxl3} - \text{anxl2}) + 0.25\,(\text{base8} - \text{beta7})$$

I also use an MBW (Merge Block Weighted) recipe to merge with anxl3 directly:

0,0.1,0.1,0,0.1,0.1,0,0.1,0.1,0,0,0,0,0,0.05,0.05,0.05,0.05,0.05,0.05

First of all, (anxl3 - anxl2) can be taken as "what anxl3 learned from the training resumed from anxl2". Since these learned things are basically meta tags and some character/style information, it makes sense to merge the anxl3/anxl2 diff.
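Both gamma formulas are "add difference" merges. The sketch below applies them key by key to state dicts. It is a minimal illustration, not the script actually used for this model (which relied on LyCORIS utilities). It assumes all checkpoints share the same keys and works with any values that support `+`, `-` and scalar `*` (torch tensors, NumPy arrays); plain floats keep the usage example readable. The helper name `add_difference` is hypothetical.

```python
from typing import Dict, Mapping, Sequence, Tuple, TypeVar

T = TypeVar("T")


def add_difference(
    base: Mapping[str, T],
    diffs: Sequence[Tuple[float, Mapping[str, T], Mapping[str, T]]],
) -> Dict[str, T]:
    """Return base + sum(alpha * (tuned - original)) for every key in base.

    Each entry of `diffs` is (alpha, tuned, original); all dicts are assumed
    to share the same keys and tensor shapes.
    """
    merged: Dict[str, T] = {}
    for key, value in base.items():
        out = value
        for alpha, tuned, original in diffs:
            out = out + alpha * (tuned[key] - original[key])
        merged[key] = out
    return merged


# gamma rev2 = beta7 + 1.0 * (anxl3 - anxl2) + 0.25 * (base8 - beta7)
beta7 = {"w": 1.0}
anxl2 = {"w": 0.5}
anxl3 = {"w": 1.5}
base8 = {"w": 3.0}
rev2 = add_difference(beta7, [(1.0, anxl3, anxl2), (0.25, base8, beta7)])
print(rev2)  # {'w': 2.5}
```

With real checkpoints, the same function would be called on the loaded state dicts; the toy one-key dicts only show the arithmetic.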

Alternatively, you can think of this merge as applying two LoRA/LyCORIS models to beta7: one extracted from anxl3 and another extracted from base7/8.
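To make the LoRA/LyCORIS analogy concrete: a LoRA "extracted" from a weight diff is typically a truncated SVD of the per-layer difference. The NumPy sketch below shows this for a single 2-D weight. The function name and rank are illustrative assumptions, and this is not the LyCORIS extraction code, which also handles conv layers and other details.

```python
import numpy as np


def extract_low_rank(tuned: np.ndarray, original: np.ndarray, rank: int):
    """Factor (tuned - original) ~= up @ down via a truncated SVD."""
    diff = tuned - original
    u, s, vh = np.linalg.svd(diff, full_matrices=False)
    up = u[:, :rank] * s[:rank]  # (out_features, rank), singular values folded in
    down = vh[:rank, :]          # (rank, in_features)
    return up, down


rng = np.random.default_rng(0)
original = rng.standard_normal((64, 32))
# Build a "tuned" weight whose diff is exactly rank 4.
delta = rng.standard_normal((64, 4)) @ rng.standard_normal((4, 32))
tuned = original + delta

up, down = extract_low_rank(tuned, original, rank=4)
merged = original + 0.8 * (up @ down)  # like applying the diff with weight 0.8
print(np.allclose(up @ down, delta))  # True
```

When the true diff has higher rank than the chosen rank, the factors only approximate it, which is one reason a "LoRA view" of the merge is an analogy rather than an exact equivalence.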

The very-low-weight MBW merge is meant to fix some overtrained artifacts in the merged model.
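MBW assigns one interpolation ratio per UNet block. The sketch below pairs the 20 weights of the recipe with block labels and blends two models per block as (1 - w) * A + w * B. Two things are assumptions: the label order (BASE, IN00 to IN08, M00, OUT00 to OUT08, which we assume matches the 20-value SDXL layout of MBW-style merge tools) and reading w as the fraction taken from anxl3. Mapping real state-dict keys to these blocks is left out; the dicts here are keyed by block label for illustration only.

```python
from typing import Dict, List

MBW_WEIGHTS = "0,0.1,0.1,0,0.1,0.1,0,0.1,0.1,0,0,0,0,0,0.05,0.05,0.05,0.05,0.05,0.05"

# Assumed SDXL block order: BASE, IN00-IN08, M00, OUT00-OUT08 (20 entries).
BLOCKS: List[str] = (
    ["BASE"]
    + [f"IN{i:02d}" for i in range(9)]
    + ["M00"]
    + [f"OUT{i:02d}" for i in range(9)]
)


def parse_mbw(spec: str) -> Dict[str, float]:
    """Map a comma-separated MBW recipe onto the assumed block labels."""
    weights = [float(x) for x in spec.split(",")]
    if len(weights) != len(BLOCKS):
        raise ValueError(f"expected {len(BLOCKS)} weights, got {len(weights)}")
    return dict(zip(BLOCKS, weights))


def mbw_merge(model_a: Dict[str, float], model_b: Dict[str, float],
              weights: Dict[str, float]) -> Dict[str, float]:
    """Per-block linear interpolation: (1 - w) * A + w * B (hypothetical keys)."""
    return {
        block: (1 - weights[block]) * model_a[block] + weights[block] * model_b[block]
        for block in model_a
    }


w = parse_mbw(MBW_WEIGHTS)
print(w["IN01"], w["M00"], w["OUT08"])  # 0.1 0.0 0.05
```

Under this reading, no block takes more than 10% from anxl3, which fits the "very low weight" description.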

Starting with safetensors 0.4.2 (or PyTorch 2.2.0), you can save models in fp8 to safetensors or PyTorch checkpoints. I have also uploaded an fp8 version of the model as a zip: unzip it, drag the fp8 safetensors file into your SD model folder, and use it normally.

Remember to update safetensors to 0.4.2 or later.

More information on FP8+FP16 inference:

A big improvement for dtype casting system with fp8 storage type and manual cast by KohakuBlueleaf · Pull Request #14031 · AUTOMATIC1111/stable-diffusion-webui (github.com)

This model was trained with aspect ratio bucketing (ARB) at resolutions from 768x1024 to 1024x1024. A total pixel count between 786432 and 1310720 (e.g. 768x1024 up to 1024x1280) is recommended.
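A small helper can check a resolution against this range or pick one for a given aspect ratio. The sketch below targets about 1024x1024 pixels and rounds each side to a multiple of 64; the target area and the multiple-of-64 rounding are common SDXL conventions assumed here, not settings stated for this model.

```python
import math
from typing import Tuple

MIN_PIXELS = 786_432    # 768 x 1024
MAX_PIXELS = 1_310_720  # 1024 x 1280


def in_recommended_range(width: int, height: int) -> bool:
    return MIN_PIXELS <= width * height <= MAX_PIXELS


def resolution_for_aspect(aspect: float, target_pixels: int = 1024 * 1024,
                          multiple: int = 64) -> Tuple[int, int]:
    """Return (width, height) with width/height ~= aspect and area ~= target."""
    height = math.sqrt(target_pixels / aspect)
    width = height * aspect
    w = max(multiple, round(width / multiple) * multiple)
    h = max(multiple, round(height / multiple) * multiple)
    return w, h


for aspect in (1.0, 3 / 4, 16 / 9):
    w, h = resolution_for_aspect(aspect)
    print(w, h, in_recommended_range(w, h))
```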

Recommended CFG scale is 4~7.

Sampler should not matter.

This model uses my own tagging system for quality tags and similar meta tags.

So although this model incorporates the diff weights from anxl3, I still recommend using my tagging system (or both).

This model was trained with artist names as tags, so you can use artist tags as "stylize tags". However, since my training config is not designed for learning styles, I don't think you can actually reproduce the style of any specific artist. Just use artist tags as style-refiner tags.

The same goes for character tags: the model accepts them, but I can't guarantee it will reproduce any particular character.

The prompt format is the same as anxl3's (see the sample images I posted).

Rating tags:

General: safe

Sensitive: sensitive

Questionable: nsfw

Explicit: explicit, nsfw

Quality tags (best to worst):

masterpiece

best quality

great quality

good quality

normal quality

low quality

worst quality

Year tags (newest to oldest):

newest

recent

mid

early

old
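Putting the tag groups together, a prompt can be assembled programmatically. The sketch below is a hypothetical helper: the tag vocabularies come from the lists above, but the ordering (content tags first, then rating, quality and year tags) is an assumption for illustration. Check the posted sample images for the exact anxl3-style format.

```python
from typing import Iterable

RATING_TAGS = {
    "general": "safe",
    "sensitive": "sensitive",
    "questionable": "nsfw",
    "explicit": "explicit, nsfw",
}
QUALITY_TAGS = ["masterpiece", "best quality", "great quality", "good quality",
                "normal quality", "low quality", "worst quality"]
YEAR_TAGS = ["newest", "recent", "mid", "early", "old"]


def build_prompt(content: Iterable[str], rating: str = "general",
                 quality: Iterable[str] = ("masterpiece", "best quality"),
                 year: str = "newest") -> str:
    """Join content, rating, quality and year tags (assumed order)."""
    quality = list(quality)
    unknown = [q for q in quality if q not in QUALITY_TAGS]
    if unknown:
        raise ValueError(f"unknown quality tags: {unknown}")
    if year not in YEAR_TAGS:
        raise ValueError(f"unknown year tag: {year}")
    tags = list(content) + [RATING_TAGS[rating]] + quality + [year]
    return ", ".join(tags)


print(build_prompt(["1girl", "solo", "looking at viewer"]))
# 1girl, solo, looking at viewer, safe, masterpiece, best quality, newest
```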

You may encounter subtle mosaic-like artifacts, possibly caused by a high learning rate or poor resizing/image encoding.

I will try to fix this in the next version. For now, try the R-ESRGAN anime6b or SCUNet models to remove them.

Since my dataset has some resize/WebP artifacts that harm the model, I will recreate my dataset based on my new system (and open-source it once I'm done).

The next plan is to train the model on a larger dataset (3M~6M images) with a better configuration. This will require A100s, and I plan to spend about 2000~10000 USD on it. If you like my work, consider sponsoring me via Buy Me a Coffee or BTC; you can find the links on my GitHub profile: KohakuBlueleaf (Kohaku-Blueleaf) (github.com)

While generating sample images, I found that my merge had initially gone wrong (due to some bugs in LyCORIS' utils).

Although the final version of rev1 is not the bugged one, some of the sample images I posted were actually generated by the bugged version.

I will upload the bugged version and base7 to my Hugging Face:

KBlueLeaf (Shih-Ying Yeh) (huggingface.co)

If you want to follow the progress of the next version (or my other projects), check out my homepage:

Kohaku's Homepage (kblueleaf.net)

Important

This model is licensed under faipl-1.0-sd just like anxl3:

Freedom of Development (freedevproject.org)

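1. The rights to reposted models belong to their original creators.

2. If you are the original creator of this model and want it verified, please contact SeaArt.AI staff through official channels. We strive to protect the rights of all creators.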