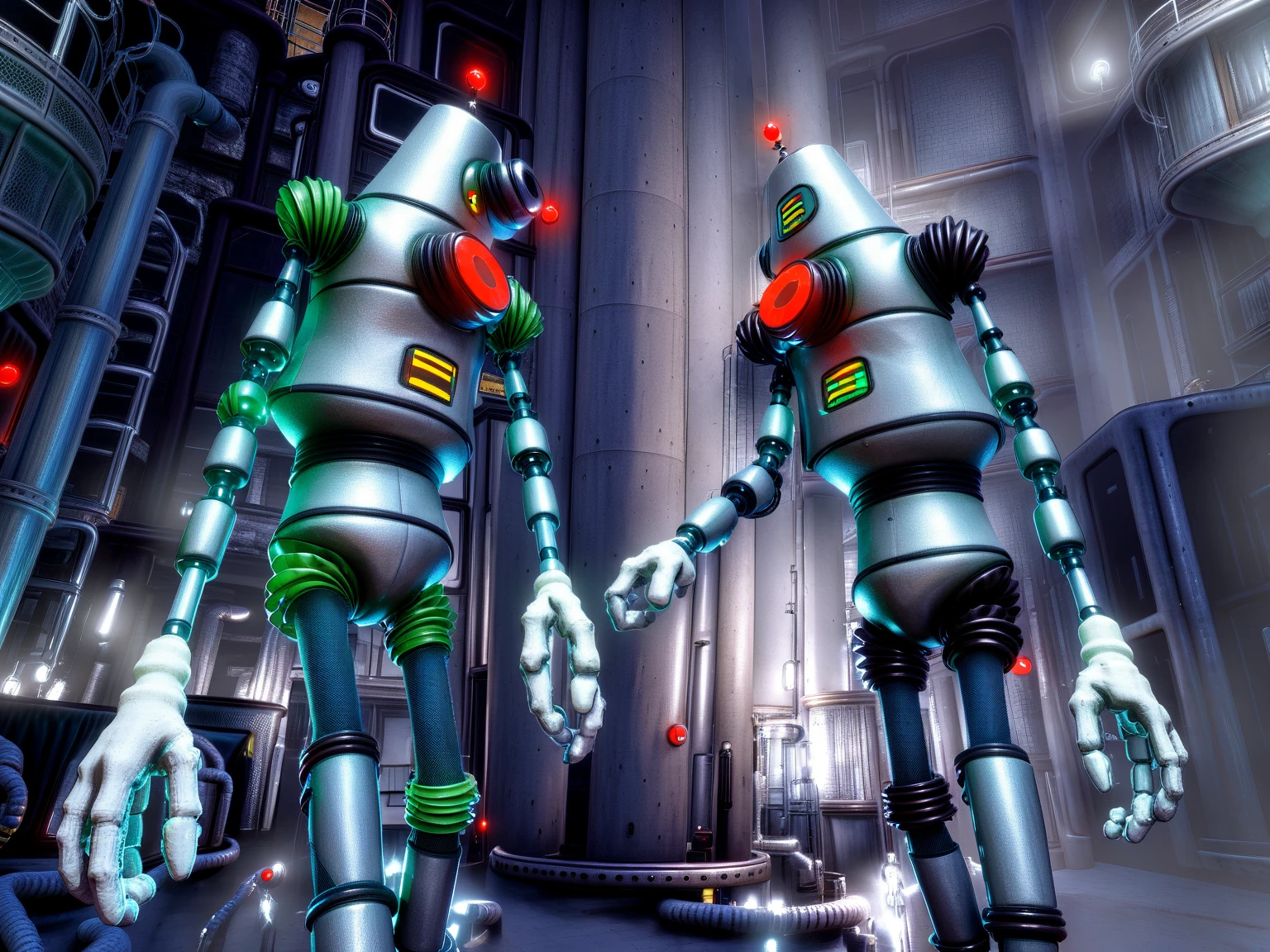

This is the Style slice of the We Happy Few B-LoRA.

This B-LoRA is sliced twice: Content (composition) and Style. I was bored AF and cooked up a ComfyUI workflow that has proven to be extremely efficient and flexible; I honestly don't think there is a better way to do style transfer. The workflow loads the composition LoRA and the style LoRA separately, samples, decodes the latents with the VAE, and feeds the result straight into IPAdapter Style & Composition SDXL. It then samples again and (only if you're a megalomaniac like me) applies a third LoRA, trained separately with the DreamBooth method (trigger + class). I will share the ComfyUI workflow. Make sure you know how to rename the CLIP Vision and IPAdapter models, because I use the Unified Loader for IPAdapter.
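For anyone wondering what "sliced" means here: as described in the B-LoRA paper, LoRA weights are trained on two specific SDXL UNet blocks, one of which ends up carrying content and the other style, so the trained file can be split into a content slice and a style slice. The snippet below is a minimal sketch of that split on a LoRA state dict. It is not the code used to make this model. The block names (`unet.up_blocks.0.attentions.0` for content, `unet.up_blocks.0.attentions.1` for style) assume diffusers-style key naming, and `filter_lora` and `slice_b_lora` are hypothetical helpers.

```python
# Assumed diffusers-style block prefixes for SDXL B-LoRA slicing.
# LoRAs exported in kohya/ComfyUI format use different key names
# (e.g. "lora_unet_up_blocks_0_attentions_0_..."), so adjust these if needed.
# The trailing dot keeps "attentions.1" from matching e.g. "attentions.10".
BLOCKS = {
    "content": ["unet.up_blocks.0.attentions.0."],
    "style": ["unet.up_blocks.0.attentions.1."],
}


def filter_lora(state_dict, prefixes):
    """Keep only the weights whose key contains one of the given block prefixes."""
    return {k: v for k, v in state_dict.items() if any(p in k for p in prefixes)}


def slice_b_lora(state_dict):
    """Split one B-LoRA state dict into a content (composition) slice and a style slice."""
    return {name: filter_lora(state_dict, prefixes) for name, prefixes in BLOCKS.items()}


if __name__ == "__main__":
    # Toy state dict with string placeholders instead of real tensors.
    toy = {
        "unet.up_blocks.0.attentions.0.proj_in.lora.down.weight": "c1",
        "unet.up_blocks.0.attentions.1.proj_in.lora.down.weight": "s1",
        "unet.down_blocks.1.attentions.0.proj_in.lora.down.weight": "x",
    }
    slices = slice_b_lora(toy)
    print(list(slices["content"].values()))  # ['c1']
    print(list(slices["style"].values()))    # ['s1']
```

With real weights, the state dict could be loaded with `safetensors.torch.load_file` and each slice written back out with `safetensors.torch.save_file`, giving two separate LoRA files that can be loaded independently.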

Of course, each model can also be used on its own.

All captions used for training, plus the ComfyUI workflows, are here:

https://drive.google.com/drive/folders/1FFS4CnX3RI4B1yhzwqrlEwLd_QGpuYCZ?usp=sharing


1. The rights to reposted models belong to their original creators.

2. Original creators should contact SeaArt.AI staff through official channels to claim their models. We are committed to protecting every creator's rights.