[Reasons for Failure in "Previous Camera Angles"]

* Wan2.1 can strongly react to character movements, but its reaction to overall movements, including the background, is weak.

* When multiple actions are described in a single prompt, even with words indicating sequence, the "tendency to strongly react to the word at the beginning" pulls the result towards the first action.

So, what can we do? There are plenty of solutions if we are willing to use difficult techniques, or if we simply avoid Wan2.1 altogether.

However, I wanted to find a way to achieve this "as easily as possible" using "Wan2.1, a model with excellent realism and expressiveness."

One answer to this is the topic of this article: "Create one scene for each camera angle you want."

(Hunyuan has better prompt responsiveness, but Wan2.1 is stronger in terms of realistic texture and visual expression.)

Warning: This article is mainly for VIP account holders.

However, it may provide an opportunity to learn about prompt creation and its background, so please read on if you are interested.

[Method]

First, the methodology. (Assuming 3 camera movements)

* Prepare 3 positive prompts.

* Use one negative prompt for all 3 videos.

* Create 3 videos and merge them at the end.

It's that simple. Let's turn this into a workflow.

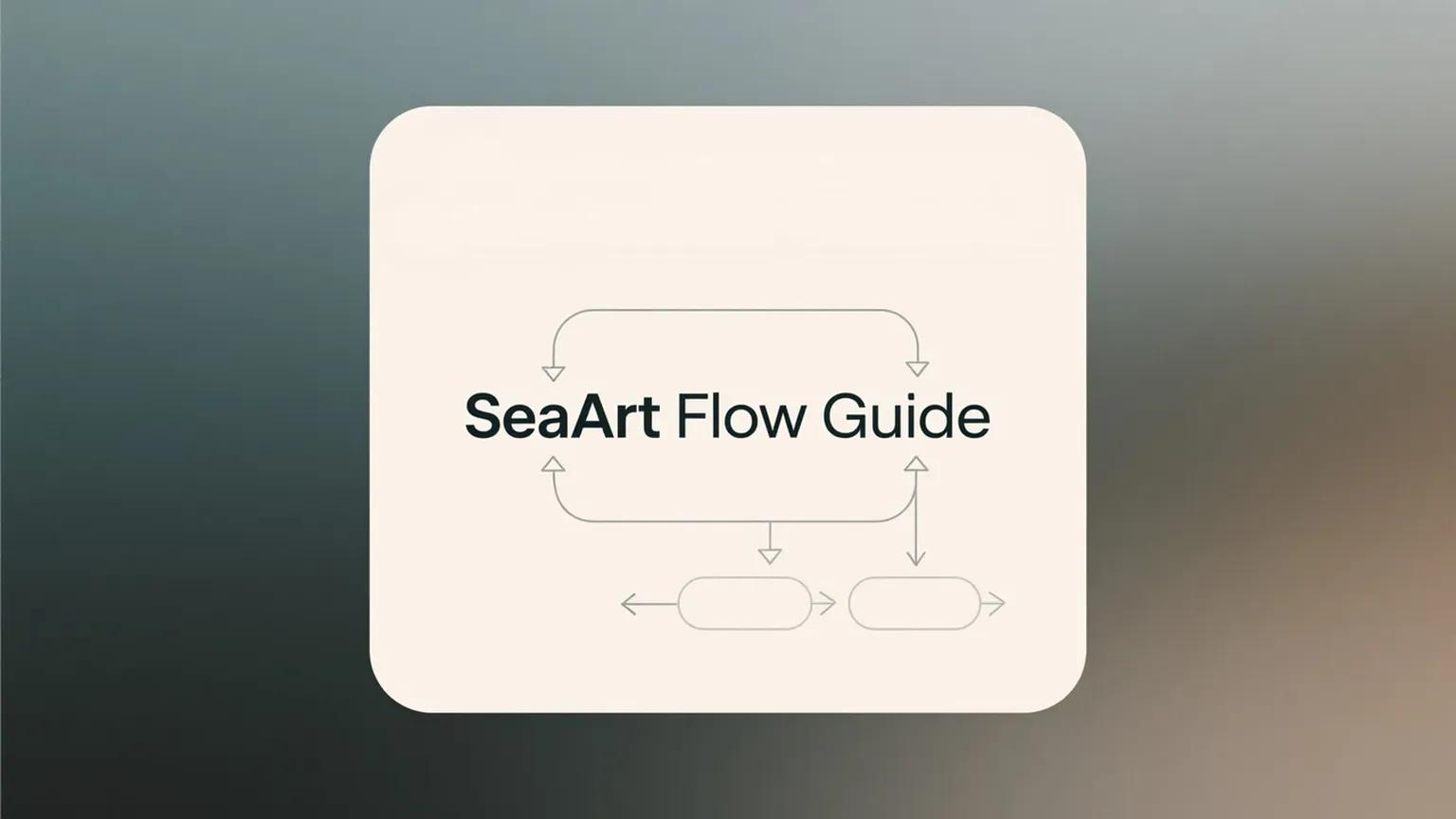

Regarding the workflow, I'll use the simplest possible nodes to focus VRAM on camera movement.

* Nodes Used:

DiffusersLoader (Set the official model for video)

LoadClip (Set the official model for video)

LoadVAE (Set the official model for video)

EmptyHunyuanLatentVideo (Specify video size and length)

CLIPTextEncode(Positive)*3 (Prompts for 3 videos)

CLIPTextEncode(Negative) (Shared for 3 videos)

KSampler*3 (Create 3 videos - remember to unify and fix the seed)

VAEDecoder*3 (Auxiliary for creating 3 videos)

MakeImageBatch (Merge 3 videos)

VHS_VideoCombine (Set up to save the final product as MP4, ready for posting)

(SaveAnimatedWEBP) (As needed for posting, etc.)

Arrange the above as shown in the diagram below to complete the workflow.

Fine-tune the settings as you like, or leave them at the default. (Except for "EmptyHunyuanLatentVideo")

The settings in the diagram below are my personal recommendations.

This is the app version: 3-split connection Text to Video - T2V - (1.2)
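The data flow of the node list above can be sketched in plain Python. This is only an illustration, not ComfyUI code: `SegmentJob`, `plan_segments`, and `merge_in_order` are hypothetical helpers, the prompts and seed are placeholders, and the "frames" are just labels standing in for sampled and decoded images.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SegmentJob:
    """One KSampler run in the three-way split (hypothetical helper type)."""
    positive: str  # segment-specific positive prompt
    negative: str  # negative prompt shared by all segments
    seed: int      # identical, fixed seed for every segment
    length: int    # frames per segment (the latent video length)


def plan_segments(positives, negative, seed, length):
    """Build one job per positive prompt, sharing negative prompt, seed, and length."""
    return [SegmentJob(p, negative, seed, length) for p in positives]


def merge_in_order(segment_frames):
    """Concatenate per-segment frame lists in order, like the batch-merge step."""
    merged = []
    for frames in segment_frames:
        merged.extend(frames)
    return merged


jobs = plan_segments(
    positives=["camera move A ...", "camera move B ...", "camera move C ..."],
    negative="placeholder negative prompt",
    seed=12345,  # placeholder; any fixed value works as long as it is shared
    length=41,   # 2 seconds per segment, as used in this article
)

# Stand-in for sampling and decoding: label each frame by segment and index.
fake_frames = [
    [f"seg{i}_frame{n}" for n in range(job.length)]
    for i, job in enumerate(jobs)
]
video = merge_in_order(fake_frames)
print(len(jobs), len(video))
```

The point of the sketch is that only the positive prompt differs between the three runs; everything else is shared, and the merge simply appends the segments in order.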

[Points to Note]

Frankly, creating the workflow itself is simple as described above, but there are some small points to note.

And that's what makes Wan2.1 troublesome.

> Workflow

* Consistency of each video: Reduce randomness in scenes and the appearance of the main character.

→ Fix the seed value of each video to the same value (Fixed mode).

(You can stabilize the character's features by adding a specific LoRA. However, versatility will be reduced.)

* Video length settings: The length value of "EmptyHunyuanLatentVideo" is the length of one video, so set it to 1/3 of the final planned length.

→ e.g., a video at 20 FPS has 20 images per second, so a 6-second video has a length of 121 (20 × 6 + 1).

This time, we will prepare three 2-second videos, so the length value for one video is 41.

→ The load on the KSampler is also split across the three runs, which improves generation speed and doubles as a technique for generating long videos.

However, with longer videos the merged result can exceed the size limit during composition and the generated video can collapse, so be careful.
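The length arithmetic above can be written as a small helper. This is a minimal sketch assuming the rule implied by the examples in this article (frames per second times seconds, plus one for the first frame); `segment_length` is a hypothetical name, not part of any node.

```python
def segment_length(fps: int, seconds_total: float, segments: int) -> int:
    """Frames for one segment: fps * seconds per segment, plus the first frame."""
    seconds_each = seconds_total / segments
    return round(fps * seconds_each) + 1


print(segment_length(20, 6, 1))  # one 6-second video  -> 121
print(segment_length(20, 6, 3))  # three 2-second videos -> 41 each
```

Note that under this rule three 41-frame segments merge into 123 frames, two more than a single 121-frame video, because each segment carries its own first frame.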

> Prompts

* Consistency of each video: Inconsistency in the main character's appearance between segments is especially fatal.

→ One technique is to give the character a few prominent features and describe them in all three prompts to make subtle differences less noticeable.

(e.g., "Beautiful Japanese woman with short hair, wearing glasses and a tight black suit")

Also, note that the prompts are independent for each segment. Pronouns corresponding to the first segment will not be recognized in the second segment or later.

* Focus on camera movement: Keep the prompts to camera movement and leave out everything else as far as possible.

→ Leave the fine modifiers to the AI's judgment.

* Grasp the characteristics of Wan2.1: Wan2.1 has weak recognition of sentences related to camera movement.

→ It is essential to place it at the beginning of the prompt, avoid "weak" words such as "slowly" and "gradually," and avoid putting multiple actions in one segment.

→ Examples of effective camera work sentences:

* Turn around from above: A top-down camera path circles around the character, following a fixed radius.

* Wrap around: The camera travels along a circular path around the (character),

* View diagonally from above: The camera descends from a high angle to shoulder level,

* Back to side: The camera shifts to a side profile view,

* Rotate around: The camera orbits around the character at a consistent distance,

Note: The effect varies depending on where you insert it, so try different locations.

In some cases, it may be more effective to place it after the character description.

→ Examples of bad patterns:

* The environment pans

* The background shifts from front to side

* The scene slowly rotates
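The prompt rules in this section can be sketched as a small builder. This is an illustration under assumptions: `build_prompt` and `WEAK_WORDS` are hypothetical names, the weak-word check is a naive substring test, and placing the camera sentence first is only the default suggested above (the note about trying other positions still applies).

```python
WEAK_WORDS = ("slowly", "gradually")  # words this article advises avoiding

CHARACTER = (
    "Beautiful Japanese woman with short hair, "
    "wearing glasses and a tight black suit"
)


def build_prompt(camera_sentence: str, character: str) -> str:
    """Put the camera sentence first, then repeat the full character description."""
    found = [w for w in WEAK_WORDS if w in camera_sentence.lower()]
    if found:
        raise ValueError(f"weak words in camera sentence: {found}")
    return f"{camera_sentence.strip()} {character.strip()}"


# One camera movement per segment; the character is spelled out every time
# because pronouns from an earlier segment are not recognized in later ones.
camera_moves = [
    "The camera descends from a high angle to shoulder level,",
    "The camera orbits around the character at a consistent distance,",
    "A top-down camera path circles around the character, following a fixed radius.",
]
prompts = [build_prompt(move, CHARACTER) for move in camera_moves]

for p in prompts:
    print(p)
```

Passing one of the bad patterns, such as "The scene slowly rotates", raises a `ValueError` because of the word "slowly"; the check does not catch the other bad patterns, which still need to be avoided by hand.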

[Result]

Although not dramatic, the result was a video that looked like a specific character was being filmed with three cameras, each moving in its own way.

[Finally]

Based on this flow configuration, "I2V_3 Scene Combination," which prepares the starting images for the three videos, also looks interesting.

Of course, if you introduce ControlNet for the character's movement, which we set aside this time, you can expect visuals even closer to what you want.

However, the more elaborate you make it, the more generation time and allocated VRAM capacity will increase, so if you generate with SeaArt, you will have to sacrifice something.

Finding that balance is one way to enjoy SeaArt's "challenge to the limits within a restricted environment."

In fact, I'll conclude this article by saying that this is exactly "the fun part."